In 2013, Sir Harry Kroto, Nobel Prize winner in Chemistry, contacted Professor Thad Starner with an idea for Google Glass. Sir Harry was having trouble communicating with a colleague who was losing his hearing. Could Google's wearable computer display a transcription of what Sir Harry was saying so that his colleague could follow the conversation more easily? Thad partnered with Professor Jim Foley to try the idea. Soon afterward, Georgia Tech released "Captioning on Glass," which used Google's new speech transcription APIs, for users to try. The system worked well for short conversations. However, Glass's display is mounted high above the wearer's line of sight and was designed for quick glances, not for constant reading while trying to maintain eye contact.

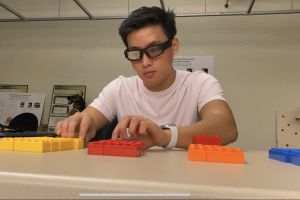

Since then, several live captioning apps have been released for mobile phones (e.g., Google's Live Transcribe), and speech recognition has improved significantly. In which situations is it advantageous to display the transcription on a head-worn display (HWD) rather than on a mobile phone? To establish sound experimental designs before recruiting the next set of deaf and hard-of-hearing participants, recent studies compared an HWD with a mobile phone for captioning while the user's hearing was blocked by noise-cancelling headphones. In the first study, which compared the Vuzix Blade HWD with a mobile phone, eight participants completed a toy block assembly task guided by captions of a live instructor's speech. The HWD produced higher mental demand, physical demand, and overall workload scores than the phone, possibly because the HWD's image was blurry. Re-running the experiment with Google Glass EE2 versus a mobile phone on another twelve participants again gave the HWD higher scores than the phone, this time for mental demand, effort, frustration, and overall workload. Although Glass has a much sharper image than the Vuzix, its speech recognition quality was significantly worse. Even so, nine of the twelve participants said they would prefer Glass for the task if its speech recognition were better. Current work refines the study by simulating speech recognition with perfect accuracy, so that the devices can be compared fairly on usability alone. These results are promising, and we are currently recruiting participants who are deaf or hard of hearing.

The Contextual Computing Group (CCG) creates wearable and ubiquitous computing technologies using techniques from artificial intelligence (AI) and human-computer interaction (HCI). We focus on giving users superpowers by augmenting their senses, improving learning, and providing intelligent assistants in everyday life. Members' long-term projects have included creating wearable computers (Google Glass), teaching manual skills without requiring active attention (Passive Haptic Learning), improving hand sensation after traumatic injury (Passive Haptic Rehabilitation), educational technology for the Deaf community, and communicating with dogs and dolphins through computer interfaces (Animal Computer Interaction).