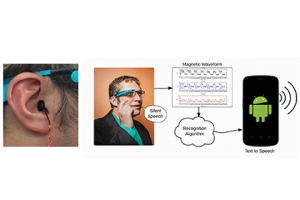

Voice control provides hands-free access to computing, but there are many situations where audible speech is not appropriate. Most unvoiced speech text entry systems cannot be used on the go because of movement artifacts. SilentSpeller enables mobile silent texting using a dental retainer with capacitive touch sensors that track tongue movement. Users type by spelling words without voicing them. In offline isolated-word testing on a 1,164-word dictionary, SilentSpeller achieves an average character accuracy of 97%. The same 97% offline accuracy is achieved on phrases recorded while walking or seated. Live text entry reaches up to 53 words per minute at 90% accuracy, which is competitive with expert text entry on mini-QWERTY keyboards, while leaving the hands free.
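The abstract reports results as character accuracy and words per minute but does not give formulas for either. The sketch below shows how these two metrics are commonly computed in text-entry research. It assumes two things: character accuracy is taken as one minus the Levenshtein edit distance divided by the reference length, and words per minute uses the convention that one "word" is five characters, timed from the first character to the last. SilentSpeller's own evaluation may define them differently, and the function names are hypothetical.

```python
def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character insertions, deletions, and substitutions turning a into b."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(
                prev[j] + 1,               # delete ca
                curr[j - 1] + 1,           # insert cb
                prev[j - 1] + (ca != cb),  # substitute (free when characters match)
            ))
        prev = curr
    return prev[-1]


def character_accuracy(reference: str, hypothesis: str) -> float:
    """Assumed definition: 1 - edit distance / reference length, floored at 0."""
    if not reference:
        return 1.0 if not hypothesis else 0.0
    return max(0.0, 1.0 - levenshtein(reference, hypothesis) / len(reference))


def words_per_minute(transcribed: str, seconds: float) -> float:
    """Assumed convention: 5 characters per word; the first character starts the timer, so it is not counted."""
    return (len(transcribed) - 1) / seconds * 60 / 5


print(character_accuracy("retainer", "retainor"))  # one substitution in 8 characters -> 0.875
print(words_per_minute("the quick brown fox", 4.0))  # 18 characters in 4 s -> 54.0
```

Because the edit distance is normalized by the length of the reference, a recognizer that drops or adds whole letters is penalized the same way as one that substitutes them.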

The Contextual Computing Group (CCG) creates wearable and ubiquitous computing technologies using techniques from artificial intelligence (AI) and human-computer interaction (HCI). We focus on giving users superpowers through augmenting their senses, improving learning, and providing intelligent assistants in everyday life. Members' long-term projects have included creating wearable computers (Google Glass), teaching manual skills without attention (Passive Haptic Learning), improving hand sensation after traumatic injury (Passive Haptic Rehabilitation), educational technology for the Deaf community, and communicating with dogs and dolphins through computer interfaces (Animal Computer Interaction).